Ep. 11 - Vibe Coding with Guardrails

How AI Engineers can ship fast without breaking prod

Maya, a founding engineer at a 6-person startup, let her AI coding agent build the entire MVP in two weeks.

The agent shipped 3,000 lines of beautifully formatted code. It compiled. Tests passed.

Her small team reviewed what they could and merged it to main. Investors loved the demo. They raised their seed round.

Two weeks after launch, production breaks.

Customer data leaks through an edge case the AI missed. Their security contractor finds hardcoded credentials buried in a utility function.

And that “working” checkout feature? It doesn’t actually handle the edge cases the product team specified. The AI built what seemed right, not what was asked for.

Now Maya’s explaining to her co-founders why they’re rolling back weeks of AI-generated code while early customers churn.

But it gets worse.

Three months later, the engineering team is stuck. The AI-generated codebase has no consistent patterns.

Every module solves problems differently. There’s no clear architecture. New features take twice as long because developers spend more time decoding AI logic than building.

Maya thought she was moving fast. She was actually accruing technical debt at AI speed.

This is the dark side of vibe coding, and it’s happening right now at companies rushing to adopt AI agents without guardrails.

Why This Is Getting Harder, Not Easier

Maya’s story isn’t happening because AI coding tools are bad. It’s happening because they’re getting very good, very fast.

I’ve been using Claude Opus 4.5 in VS Code and Cursor, and the velocity is real. With a few prompts, entire features and even full applications appear. The code looks clean. It compiles. It passes tests.

Scroll X for a few minutes and you’ll see people shipping serious software with minimal human input.

That’s the risk.

Claude doesn’t just speed up coding. It lets you generate large volumes of plausible code before you’ve fully validated intent, architecture, or edge cases. The gap between “it works” and “it’s correct” widens quietly, often unnoticed until production.

This isn’t a knock on Claude. It’s one of the best coding agents available. But AI doesn’t slow down when requirements are vague. It doesn’t push back on unclear assumptions. It fills in the gaps confidently and moves on.

Without guardrails, that confidence becomes invisible risk.

Security issues slip through. Architecture erodes. Code grows faster than shared understanding.

So the real question isn’t whether AI is production-ready.

It’s whether your process is.

The “Vibe Coding” Debate (And Why Both Sides Are Right)

The term vibe coding was popularized by Andrej Karpathy in early 2025 to describe a shift in how software gets built: instead of writing code line by line, developers guide AI through natural language, iterating conversationally while the model handles implementation.

When it works, it feels like a superpower.

But the criticism is fair. Uncontrolled vibe coding isn’t productivity. It’s speed without intent. You get features without architecture, output without guarantees, and code that “works” until it doesn’t.

Still, dismissing vibe coding entirely misses the point. The problem isn’t the vibe. It’s the absence of guardrails.

With the right constraints, vibe coding becomes leverage. AI handles execution. Humans stay accountable for intent, structure, and risk.

In short:

Vibe coding isn’t dangerous. Vibe coding without discipline is.

And that’s exactly where most teams get stuck. They swap keystrokes for prompts but never change the process. Which brings us to the real fix.

What GitHub Gets Right About AI Safety

One thing GitHub has figured out early is this: if AI is going to generate code at scale, security can’t be optional or manual.

That’s why many of the most important guardrails for AI-generated code already live directly in the GitHub workflow:

Secret scanning catches hardcoded credentials before they ever reach production. This is exactly the kind of mistake AI models make quietly and confidently.

Code scanning surfaces vulnerabilities and insecure patterns automatically, not during a rushed human review.

Dependency monitoring keeps AI-written code from pulling in vulnerable packages and forgetting about them.

Branch protection and required checks ensure nothing reaches main without passing review and CI, and that an agent can’t accidentally force-push over or delete the main branch.

Policy enforcement at merge time blocks risky changes even when the code “looks fine.”

None of these tools make AI smarter.

They make failure harder to ship.

That’s the key distinction. When AI accelerates output, humans can’t rely on intuition and spot checks anymore. You need automated systems that assume mistakes will happen and catch them by default.
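To make "catch them by default" concrete, here is a minimal sketch of the idea behind secret scanning. It is not GitHub's implementation: the two patterns and the `find_secrets` helper are my own illustrative assumptions, and real scanners cover far more token formats.

```python
import re

# Hypothetical patterns for illustration only; production scanners use many
# more, including provider-specific token formats.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_assignment": re.compile(
        r"(?i)\b(password|passwd|secret|api_key|token)\b\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for each line that looks like a secret."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    # The kind of utility function an agent might quietly generate.
    sample = 'def connect():\n    password = "hunter2-prod-db"\n    return password\n'
    for lineno, name in find_secrets(sample):
        print(f"line {lineno}: possible {name}")
```

The point is where the check runs, not how clever it is: it looks at every change, every time, without waiting for a reviewer to notice.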

But here’s the catch.

GitHub’s security features are excellent at enforcing rules. They don’t enforce intent.

They can tell you if code is dangerous. They can’t tell you if it’s wrong.

Which is why guardrails alone don’t solve the Maya problem. They prevent obvious disasters, but they don’t guarantee the AI built what the product actually needed.
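Here is a small sketch of what "safe but wrong" can look like. The rule that a checkout total never drops below zero is a hypothetical spec requirement I made up for illustration, and both function names are assumptions.

```python
from decimal import Decimal


def checkout_total(subtotal: Decimal, discount: Decimal) -> Decimal:
    """Plausible generated code: no secrets, no injection, nothing for a scanner to flag."""
    return subtotal - discount


def checkout_total_per_spec(subtotal: Decimal, discount: Decimal) -> Decimal:
    """Same calculation with the hypothetical spec rule applied: totals never go below zero."""
    return max(subtotal - discount, Decimal("0"))


if __name__ == "__main__":
    # The happy path agrees, so a quick demo looks fine.
    print(checkout_total(Decimal("20"), Decimal("5")))
    print(checkout_total_per_spec(Decimal("20"), Decimal("5")))
    # The edge case only differs when the discount exceeds the subtotal.
    print(checkout_total(Decimal("20"), Decimal("25")))
    print(checkout_total_per_spec(Decimal("20"), Decimal("25")))
```

Both versions pass every security check. Only one of them matches what the product team asked for, and nothing in the scan results will tell you which.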

That’s where spec-driven development enters the picture.

What Is Spec-Driven Coding (And Why It Matters Now)

Here’s the problem with AI coding today: You describe what you want, get a block of code back, and it looks right... but doesn’t quite work.

Sometimes it doesn’t compile. Sometimes it solves part of the problem but misses your actual intent. Sometimes the stack choices make no sense for your architecture.

This isn’t a model capability problem. It’s a process problem.

We’re treating coding agents like search engines when we should treat them like literal-minded pair programmers. They’re exceptional at pattern recognition but need unambiguous instructions.

That’s where spec-driven development changes the game.

Instead of coding first and hoping the AI figures it out, you flip the script:

Start with a specification that becomes the shared source of truth.

Think of it as a contract. The spec defines what your code should do, and the AI generates an implementation you can check against it. Less guesswork. Fewer surprises. Higher-quality code. And the spec isn’t frozen: it can evolve over time.

How Spec-Driven Development Actually Works

GitHub just open-sourced Spec Kit, a toolkit that brings spec-driven development to any coding agent workflow (Copilot, Claude Code, Gemini CLI, whatever you’re using).

Put simply, it makes planning and design easier and more robust when building software applications.

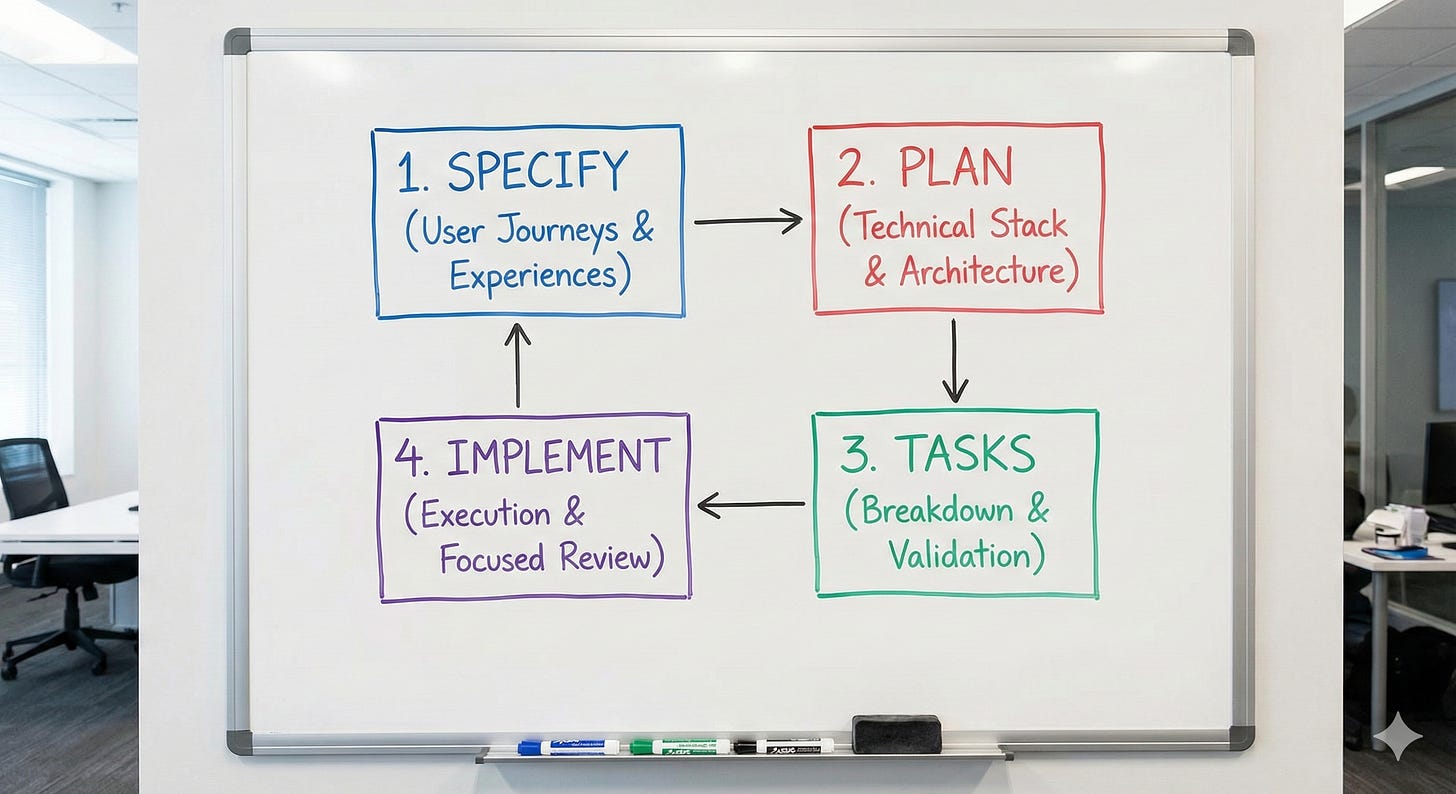

Here’s how the process breaks down:

1. Specify (User Journeys & Experiences)

You provide a high-level description of what you’re building and why, and the coding agent generates a detailed specification. This isn’t about technical stacks or app design.

It’s about user journeys, experiences, and what success looks like.

Who will use this? What problem does it solve for them? How will they interact with it? What outcomes matter?

Think of it as mapping the user experience you want to create, and letting the coding agent flesh out the details. Crucially, this becomes a living artifact that evolves as you learn more about your users and their needs.

2. Plan (Technical Stack & Architecture)

Now you get technical. In this phase, you provide the coding agent with your desired stack, architecture, and constraints, and the coding agent generates a comprehensive technical plan.

If your company standardizes on certain technologies, this is where you say so.

If you’re integrating with legacy systems, have compliance requirements, or have performance targets you need to hit, all of that goes here.

You can also ask for multiple plan variations to compare and contrast different approaches.

If you make your internal docs available to the coding agent, it can integrate your architectural patterns and standards directly into the plan.

After all, a coding agent needs to understand the rules of the game before it starts playing.
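One way to keep those rules from living only in a document is to turn a plan constraint into a check. The sketch below is not part of Spec Kit: the allowlists and the `unapproved_imports` helper are hypothetical, standing in for whatever stack your plan names.

```python
import ast

# Hypothetical allowlist standing in for the stack named in your plan.
APPROVED_PACKAGES = {"fastapi", "sqlalchemy", "pydantic"}
# Small illustrative subset of the standard library that is always allowed.
STDLIB_ALLOWED = {"datetime", "json", "typing"}


def unapproved_imports(source: str) -> set[str]:
    """Return top-level packages imported by `source` that the plan does not allow."""
    allowed = APPROVED_PACKAGES | STDLIB_ALLOWED
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            found.add(node.module.split(".")[0])
    return found - allowed


if __name__ == "__main__":
    generated = "import flask\nfrom sqlalchemy.orm import Session\nimport json\n"
    print(unapproved_imports(generated))
```

Run something like this in CI and the agent reaching for a framework outside your stack becomes a failed check instead of a surprise in review.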

3. Tasks (Breakdown & Validation)

The coding agent takes the spec and the plan and breaks them down into actual work.

It generates small, reviewable chunks that each solve a specific piece of the puzzle.

Each task should be something you can implement and test in isolation; this is crucial because it gives the coding agent a way to validate its work and stay on track, almost like a test-driven development process for your AI agent.

Instead of “build authentication,” you get concrete tasks like “create a user registration endpoint that validates email format.”
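Here is a rough sketch of what that registration task could look like once it carries its own acceptance cases. Everything in it is assumed for illustration: the `register_user` handler, the status codes, and a deliberately simple email pattern that is not a full RFC 5322 validator.

```python
import re

# Deliberately simple pattern for illustration; not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def register_user(email: str) -> dict:
    """Hypothetical handler body: reject malformed emails, accept the rest."""
    if not EMAIL_RE.fullmatch(email):
        return {"status": 400, "error": "invalid email format"}
    return {"status": 201, "email": email.lower()}


# Acceptance cases written alongside the task, before the implementation.
ACCEPTANCE_CASES = [
    ("maya@example.com", 201),
    ("Maya@Example.com", 201),
    ("no-at-sign.example.com", 400),
    ("two@@example.com", 400),
    ("missing-domain@", 400),
    ("", 400),
]


def run_acceptance() -> list[str]:
    """Return a description of every failing case; an empty list means the task is done."""
    failures = []
    for email, expected in ACCEPTANCE_CASES:
        actual = register_user(email)["status"]
        if actual != expected:
            failures.append(f"{email!r}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    print(run_acceptance() or "all acceptance cases pass")
```

A task this small gives both you and the agent the same definition of done.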

4. Implement (Execution & Focused Review)

Your coding agent tackles the tasks one by one (or in parallel, where applicable).

But here’s what’s different: instead of reviewing thousand-line code dumps, you, the developer, review focused changes that solve specific problems.

The coding agent knows what it’s supposed to build because the specification told it. It knows how to build it because the plan told it. And it knows exactly what to work on because the task told it.

The process above is referenced from this blog. I also highly recommend watching this YouTube demo of Spec Kit.

Why This Works When Vague Prompting Fails

Language models are great at pattern completion. They’re terrible at mind reading.

When you prompt “add photo sharing to my app,” you’re forcing the model to guess at thousands of unstated requirements. Some assumptions will be wrong. You won’t discover which ones until deep into implementation.

With spec-driven development:

The specification captures your intent clearly

The plan translates it into technical decisions

The tasks break it into implementable pieces

The AI handles the actual coding

The result? The AI isn’t guessing. It’s executing against explicit requirements.
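The chain from spec to tasks can be sketched as a small data model with a traceability check. This is not Spec Kit's file format: the `Requirement` and `Task` classes and the `traceability_gaps` helper are hypothetical, meant only to show the idea that every task should point back to something the spec asked for.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    id: str
    text: str


@dataclass
class Task:
    id: str
    description: str
    satisfies: list[str] = field(default_factory=list)  # requirement ids


def traceability_gaps(requirements, tasks):
    """Return (requirement ids no task covers, task ids that trace to no known requirement)."""
    known = {r.id for r in requirements}
    covered = {rid for t in tasks for rid in t.satisfies}
    uncovered = sorted(known - covered)
    orphans = sorted(t.id for t in tasks if not set(t.satisfies) & known)
    return uncovered, orphans


if __name__ == "__main__":
    # Hypothetical slice of a photo sharing spec.
    requirements = [
        Requirement("R1", "Users can upload a photo"),
        Requirement("R2", "Users can share an album by link"),
    ]
    tasks = [
        Task("T1", "Create photo upload endpoint", ["R1"]),
        Task("T2", "Add dark mode", []),
    ]
    uncovered, orphans = traceability_gaps(requirements, tasks)
    print("requirements with no task:", uncovered)
    print("tasks with no requirement:", orphans)
```

An uncovered requirement is a feature that will quietly go missing. An orphan task is the AI building what seemed right rather than what was asked for.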

This is especially powerful for three scenarios:

Greenfield projects → A small amount of upfront spec work ensures the AI builds what you actually intend, not a generic solution based on common patterns.

Feature work in existing systems → Creating a spec for new features forces clarity on how they interact with existing code. The plan encodes architectural constraints so new code feels native, not bolted-on.

Legacy modernization → Capture essential business logic in a modern spec, design fresh architecture in the plan, then let AI rebuild the system without carrying forward technical debt.

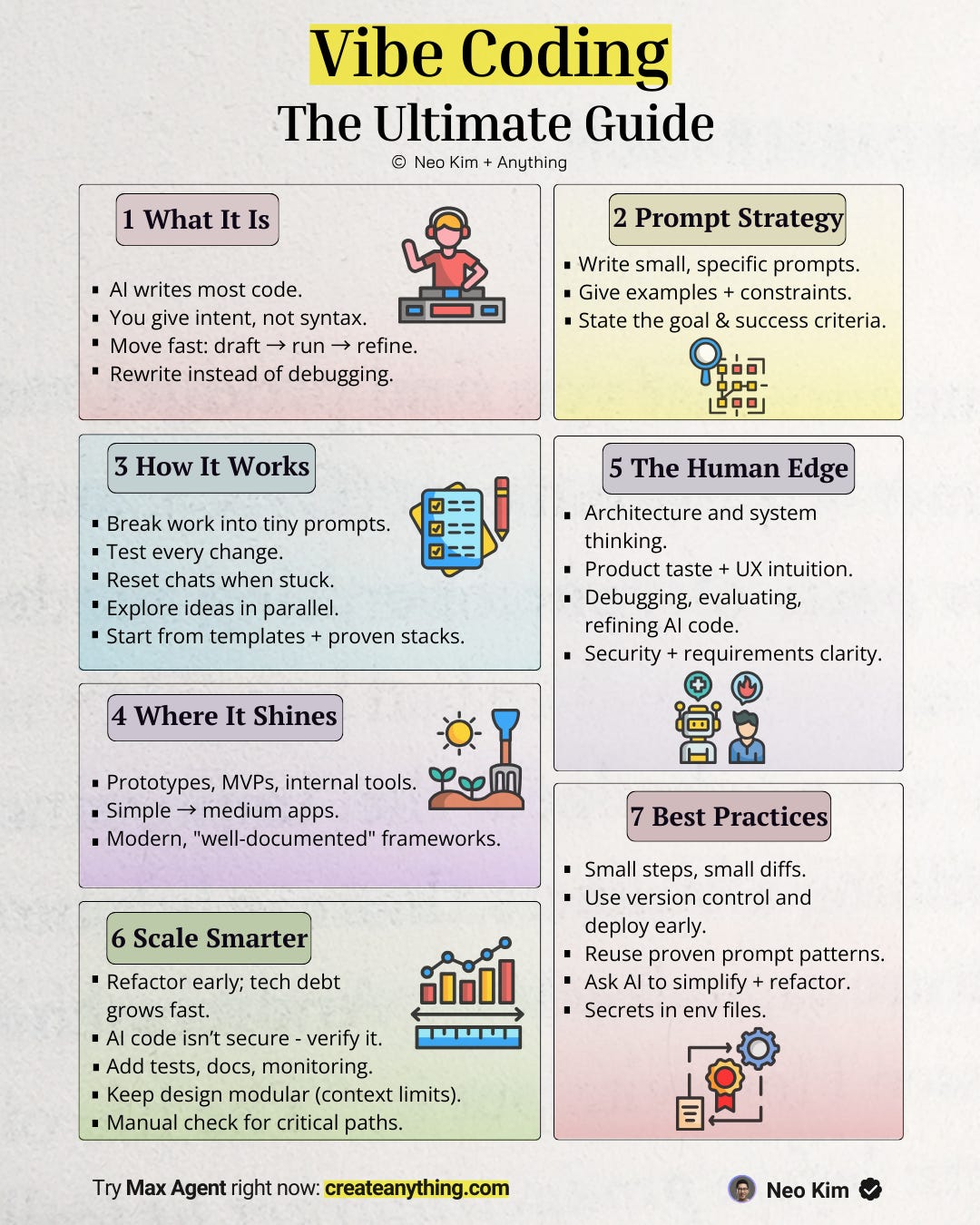

Here is Neo Kim’s essential "Vibe Coding Cheatsheet," offering practical tips for coding with AI agents.

The Bottom Line

Software engineering is being democratized at an unprecedented pace, and that trend will only accelerate in 2026.

But democratization without discipline quickly turns into chaos.

The winners won’t be the people who can write the cleverest prompts. They’ll be the engineers who pair AI’s speed with architectural rigor, strong security practices, and clear, spec-driven validation.

The future belongs to software architects who know how to direct AI agents to build real software that delivers immediate value and solves meaningful problems in society.
