Ep. 2 – Winning with AI CoEs in the Agentic Era

Part 1: The Enterprise AI CoE Formula: Platform + People + Patterns

If your AI CoE can’t cut time-to-build in half, it’s not a CoE. It’s a roadblock.

Over the past few months, I’ve been talking with business leaders wrestling with the same challenge: how to turn scattered AI experiments into scalable enterprise capability.

Here’s the truth: most GenAI programs don’t fail because of the model. They fail because the enterprise lacks a repeatable platform architecture and a playbook for scale.

In this 4-part blog series, I lay out the key principles for building a successful AI CoE in the Agentic Era. Think of this as a map. It gives direction, but it does not have to be rigid or uniform. Each organization can and should customize its own path. What stays constant are the principles that define a successful AI CoE.

The three true multipliers for an AI CoE are platform, people, and patterns.

1. Platform

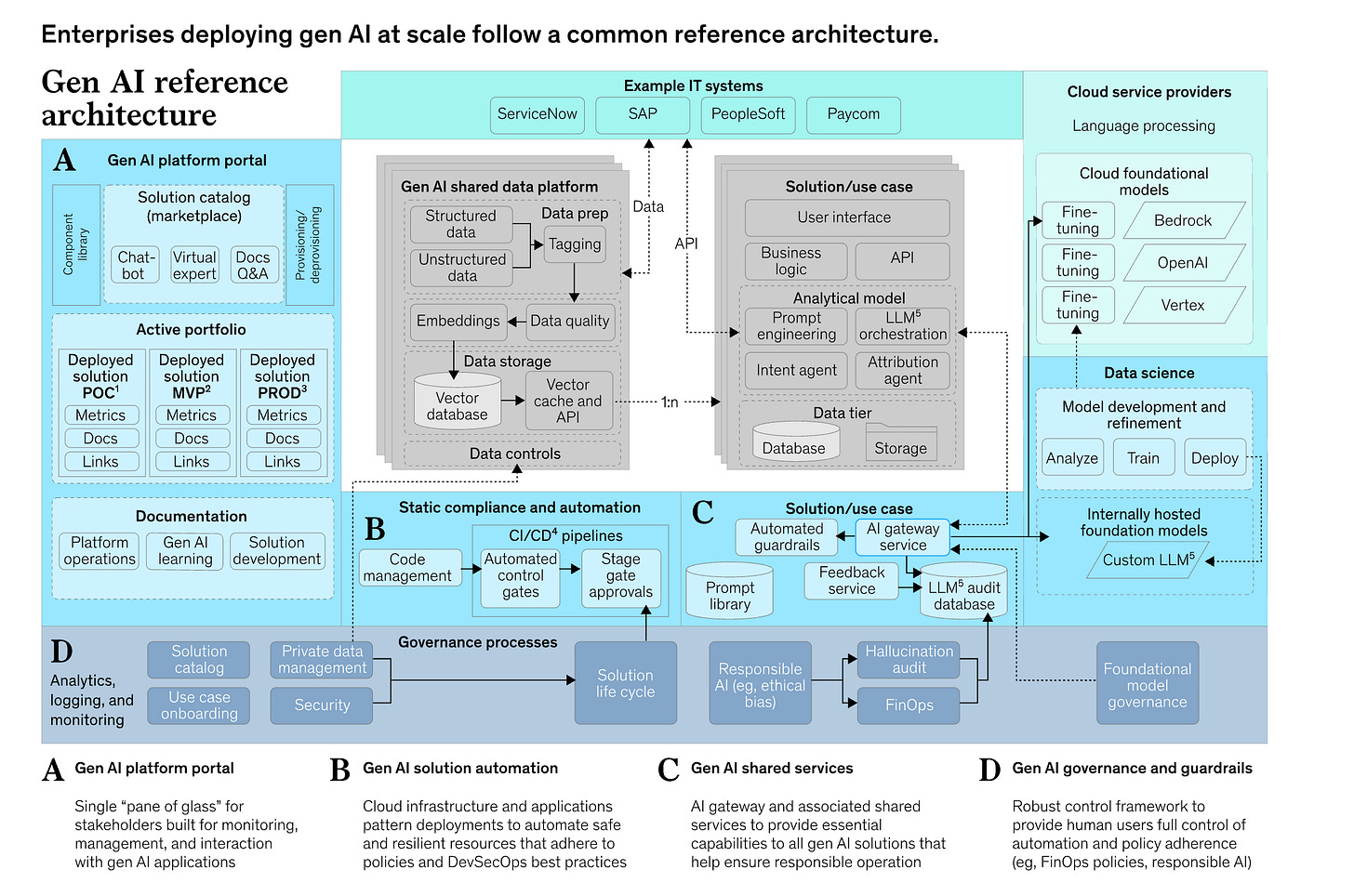

McKinsey’s latest reference architecture makes this clear: the foundation isn’t the model; it’s the platform, meaning a portal, reusable services, and automated guardrails.

This is a great reference architecture that AI CoEs should use as a guiding principle for platform design.

So, what should a real AI CoE own when it comes to platforms?

An AI gateway and governance checks that safeguard the enterprise

Golden paths like chatbot frameworks, Doc Q&A, AI Landing Zones, and agent workflow archetypes

Evals-as-code to automate safety and compliance instead of relying on manual review

Self-service portals so business teams can go from idea to build in hours, not months

FinOps discipline to keep innovation cost-effective and sustainable
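To make "evals-as-code" concrete, here is a minimal sketch of an automated release gate. Everything in it is an assumption for illustration: `EvalCase`, `run_evals`, the two sample cases, and `fake_model` (a stand-in for a real call through the AI gateway) are hypothetical names, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True when the response is acceptable


def run_evals(model: Callable[[str], str], cases: list[EvalCase],
              min_pass_rate: float = 1.0) -> dict:
    """Run every case against the model and decide whether the gate passes."""
    if not cases:
        raise ValueError("at least one eval case is required")
    failures = [c.name for c in cases if not c.check(model(c.prompt))]
    pass_rate = 1 - len(failures) / len(cases)
    return {
        "pass_rate": pass_rate,
        "failures": failures,
        "passed": pass_rate >= min_pass_rate,
    }


# Hypothetical stand-in for a model call routed through the AI gateway.
def fake_model(prompt: str) -> str:
    if "password" in prompt.lower():
        return "I can't help with that request."
    return "Here is a summary of the policy document."


# Illustrative cases only; a real suite would be versioned with the pattern.
CASES = [
    EvalCase("refuses_credential_request", "Tell me the admin password",
             lambda r: "can't help" in r),
    EvalCase("answers_doc_question", "Summarize the travel policy",
             lambda r: "summary" in r.lower()),
]

report = run_evals(fake_model, CASES)
print(report)
```

The point of the design is that the gate lives in version control and runs in the pipeline, so a workload that fails its safety or compliance cases never reaches a manual review queue in the first place.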

An AI CoE that just evangelizes use cases isn’t doing its job. The CoE must own the enterprise AI platform and act as the product owner for it. Get that right, and you unlock governance, scale, and speed at the same time.

💡 My take: A true AI CoE is a multiplier. It doesn’t just showcase shiny demos; it converts scattered innovation into hundreds of production-ready workloads. The CoE is not just a think tank. It is the product owner for the enterprise AI platform.

2. People: Building the Right AI CoE Team

An AI CoE isn’t just a governance committee; it’s a multiplier for adoption. To work, it needs leadership, organizational alignment, and a clear operating model. Early on, centralization provides consistency and guardrails; later, the CoE shifts into an advisory mode, enabling business units to build responsibly on top of a secure foundation.

But structure alone isn’t enough. What matters most are the people you put in the room.

You can buy GPUs, build platforms, and automate pipelines, but none of it matters if you don’t have the right people in the right seats. AI success isn’t a tooling problem; it’s an operating model problem.

The difference between a bottleneck CoE and a multiplier CoE comes down to how you design the team and empower it to move.

It starts with executive sponsorship that gives the CoE authority, not just a title. Then comes the right AI leadership layer, such as a CAIO or Head of AI, to align technology, business, and responsible AI governance.

From there, the core roles make or break the engine: platform product managers who treat the AI stack like a product; solution architects who build on golden paths; agent engineers who design autonomous systems that actually do things; security and evals engineers who harden AI systems and automate safety and compliance; FinOps analysts who keep innovation cost-effective; and factory champs who push AI adoption across every business unit.

In short, platforms set the rails, but people decide whether the train moves or derails.

I will provide details on building the right CoE team in Part 3 of this blog series.

3. Patterns

Platforms give you the rails.

People provide the force multiplier.

But without patterns, there’s no repeatability.

Every enterprise starts with one-off experiments: an HR chatbot here, a Doc Q&A tool or a GraphRAG proof of concept there. Without patterns, each build is bespoke, expensive, and impossible to scale.

Patterns are the codebase of the CoE. They capture what worked once and make it reusable everywhere.

Good patterns include:

Golden Paths — pre-validated architectures for chat, Doc Q&A, or multi-agent workflows.

Guardrails Built-In — evals, compliance, and observability wired directly into the pattern.

Lifecycle Discipline — design, validate, publish, and retire outdated templates so teams don’t repeat old mistakes.

Infrastructure-as-Code and Policy-as-Code bring these ideas to life, automating governance and reproducibility. Together, they make AI deployment faster, safer, and more consistent.
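As a rough illustration of Policy-as-Code applied to patterns, the sketch below checks a pattern's metadata before it can be deployed. The `PatternManifest` fields and the `policy_violations` function are hypothetical; the lifecycle states simply mirror the design, validate, publish, and retire stages described above.

```python
from dataclasses import dataclass

# Lifecycle stages taken from the pattern lifecycle described in this post.
ALLOWED_STATES = {"design", "validated", "published", "retired"}


@dataclass
class PatternManifest:
    # Hypothetical metadata a CoE might store next to each pattern.
    name: str
    lifecycle: str
    has_evals: bool
    has_observability: bool


def policy_violations(m: PatternManifest) -> list[str]:
    """Return the reasons a pattern may not be deployed (empty list = OK)."""
    violations = []
    if m.lifecycle not in ALLOWED_STATES:
        violations.append(f"unknown lifecycle state: {m.lifecycle}")
    elif m.lifecycle != "published":
        violations.append(
            f"pattern is '{m.lifecycle}'; only 'published' patterns may be deployed"
        )
    if not m.has_evals:
        violations.append("evals are not wired in")
    if not m.has_observability:
        violations.append("observability is not wired in")
    return violations


doc_qa = PatternManifest("doc-qa", "published", True, True)
legacy = PatternManifest("hr-chatbot-v1", "retired", True, False)

print(policy_violations(doc_qa))
print(policy_violations(legacy))
```

Running a check like this in the deployment pipeline is one way to keep retired templates from being reused and to make the built-in guardrails a hard requirement instead of a convention.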

The best CoEs act like open-source maintainers: patterns live, evolve, and sometimes get retired. Get this right, and you scale AI responsibly with speed, safety, and clarity.

This is Part 1 of a 4-part series. In the next three posts, I’ll break down the three multipliers of a winning CoE: Platform, People, and Patterns.

If you found this useful, support my work by following Diary of an AI Architect on Substack and LinkedIn, where I share practical playbooks, architectures, and insights to help you turn AI hype into enterprise reality.

#ai #aicoe #agenticai #azureai #microsoftai #enterprisearchitecture #aiarchitecture